A quick comment I made in my article about the iPhone 6 prompted one reader with display design experience to take me to task. Yes, I have some explaining to do.

What I wrote was something along the lines of “you only need 8mp for a 4K display.” I’ve written that before, and in one sense, it’s absolutely right: a 3840 x 2160 display has about 8.3 million pixels. Giving most 4K displays anything more than an 8mp RGB file really doesn’t gain you anything useful at the moment.

However, there’s more going on on the technical side than just pixel count. We need to talk about display dots versus Bayer sensor samples. So let’s do that.

A 4K video display technically has 11,520 dots on each of 2160 lines (3840 pixels per line, each made of three dots). A “dot” can be red, green, or blue, and is actually a tall rectangle, not a dot. To drive a 4K display perfectly, you’d need to give it 11,520 x 2160 = 24,883,200 pieces of information (24.9m).
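
The display arithmetic is easy to check. This is a minimal sketch, assuming the standard 3840 x 2160 UHD grid and a simple layout of one red, one green, and one blue dot per pixel (real panels can use other subpixel arrangements); `display_dots` is a hypothetical helper, not any manufacturer’s tool.

```python
# 4K UHD display geometry; assumes one R, one G, and one B dot per pixel.
UHD_WIDTH = 3840
UHD_HEIGHT = 2160
DOTS_PER_PIXEL = 3


def display_dots(width: int, height: int, dots_per_pixel: int = DOTS_PER_PIXEL) -> tuple[int, int]:
    """Return (dots per line, total dots) for an RGB display of the given size."""
    dots_per_line = width * dots_per_pixel
    return dots_per_line, dots_per_line * height


per_line, total = display_dots(UHD_WIDTH, UHD_HEIGHT)
print(f"{UHD_WIDTH * UHD_HEIGHT:,} pixels, {per_line:,} dots per line, {total:,} dots total")
# prints: 8,294,400 pixels, 11,520 dots per line, 24,883,200 dots total
```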

At the other end, a D810 shooting in 16:9 aspect ratio has 7360 pieces of information on each of 4140 lines, or 30,470,400 pieces of information (30.5m).
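
The 4140-line figure comes from keeping the D810’s full 7360-pixel width and cropping its 4912-pixel height to 16:9. A small sketch of that crop, assuming the full-width crop is how the camera (or you) gets to 16:9; `crop_to_aspect` is a hypothetical helper for illustration.

```python
# Nikon D810 full-sensor dimensions in pixels (photosites).
D810_WIDTH = 7360
D810_HEIGHT = 4912


def crop_to_aspect(width: int, height: int, aspect_w: int = 16, aspect_h: int = 9) -> tuple[int, int]:
    """Keep the full width and crop the height to the requested aspect ratio."""
    cropped_height = width * aspect_h // aspect_w
    if cropped_height > height:
        raise ValueError("sensor is too short for this aspect ratio at full width")
    return width, cropped_height


w, h = crop_to_aspect(D810_WIDTH, D810_HEIGHT)
print(w, h, f"{w * h:,}")  # prints: 7360 4140 30,470,400
```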

The problem is that the capture and display don’t align. The Bayer pattern on the D810 means we have 3680 green samplings on each of 4140 lines, and we need to produce 3840 on each of 2160 lines. Worse, we have only 3680 red and 3680 blue samplings on each of 2070 lines, where the display wants 3840 of each on 2160 lines.
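
Those per-channel numbers fall straight out of the Bayer layout. Here is a sketch assuming an RGGB tile (row 0 runs R G R G…, row 1 runs G B G B…); the D810’s actual tile order shifts where each color sits but not how many of each there are. `bayer_channel_grids` is a hypothetical helper.

```python
# Per-channel sample grids for a Bayer sensor, assuming an RGGB tile.
# Other tile orders (GRBG, BGGR, ...) give the same counts.


def bayer_channel_grids(width: int, height: int) -> dict[str, tuple[int, int]]:
    """Return {channel: (samples per line, lines)} for an even-sized Bayer mosaic."""
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even sensor dimensions")
    half_w, half_h = width // 2, height // 2
    return {
        "green": (half_w, height),  # every line carries green at every other site
        "red": (half_w, half_h),    # only every other line carries red
        "blue": (half_w, half_h),   # the remaining lines carry blue
    }


DISPLAY = (3840, 2160)  # what a 4K display wants for each of R, G, and B

# D810 in 16:9: green 3680 x 4140; red and blue 3680 x 2070 each.
for channel, (per_line, lines) in bayer_channel_grids(7360, 4140).items():
    print(f"{channel}: {per_line} x {lines} (display wants {DISPLAY[0]} x {DISPLAY[1]})")
```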

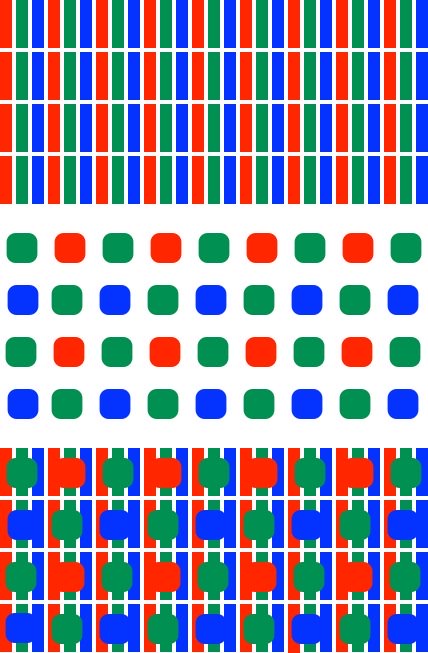

Let’s illustrate the problem. Top is a 4K display. Middle is a Bayer capture at the “same pixel count.” Bottom is something akin to the Bayer mapped to the 4K if both were producing the same pixel count (and remember, the best camera we have today doesn’t align this well):

It would be interesting to have a software program that took a D810 NEF file directly, cropped it to 16:9, and mapped the implied 3680 x 2070 data set onto the 3840 x 2160 display. We’re good on the luminance data, but we’d have to do some work on the vertical data. Still, we would probably produce a cleaner, better-looking image than we can get by handing the display an 8mp RGB JPEG, which has been aliased, downsized, and compressed.
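
One simple way to get that “implied” data set is to treat each 2x2 Bayer quad as a single RGB pixel, with no demosaicing interpolation. This is a hypothetical sketch of that swapped-in “superpixel” technique, assuming an RGGB tile and raw values already read into a list of rows; it is not how Nikon or any particular raw converter processes a NEF.

```python
def bayer_to_rgb_superpixels(mosaic: list[list[float]]) -> list[list[tuple[float, float, float]]]:
    """Collapse each 2x2 RGGB quad into one RGB pixel (half resolution each way).

    No interpolation: red and blue come straight from their single photosite,
    and green is the mean of the two green photosites in the quad.
    """
    height, width = len(mosaic), len(mosaic[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")
    rgb = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = mosaic[y][x]
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2
            b = mosaic[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


# Tiny 4 x 2 mosaic (two quads): row 0 is R G R G, row 1 is G B G B.
tiny = [
    [10, 20, 30, 40],
    [22, 50, 44, 60],
]
print(bayer_to_rgb_superpixels(tiny))  # [[(10, 21.0, 50), (30, 42.0, 60)]]
```

Run over a 7360 x 4140 crop, this yields 3680 x 2070 RGB pixels, about 4.3% short of 3840 x 2160 in each direction, so some upscaling would still be needed. Averaging the two greens also gives up the extra vertical green resolution the sensor captured.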

The catch is that the television makers have pretty much set their sights on 4K as their next possible salvation for new sales, and they’re champing at the bit for content that really makes those displays pop. Stuffing an 8mp iPhone image onto one is a start, but there’s not enough original data to truly bring out what the display is capable of.

Coupled with this are other issues. 4K video (as recorded by most currently available cameras) isn’t actually fully color capable. Most of it is 4:2:2 color at best (and often 4:2:0), meaning that while the luminance information (which is dominated by green) is there at full resolution, the color information (the red and blue color-difference channels) is recorded at reduced resolution.
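
To put numbers on “compromised,” here is a sketch counting luma (Y) and chroma (Cb, Cr) samples per 3840 x 2160 frame under each scheme. It assumes the usual reading of the notation: 4:2:2 halves chroma horizontally, 4:2:0 halves it both horizontally and vertically. `samples_per_frame` is a hypothetical helper.

```python
# J:a:b chroma subsampling notation -> (horizontal, vertical) chroma divisors.
SUBSAMPLING = {
    "4:4:4": (1, 1),  # full-resolution chroma
    "4:2:2": (2, 1),  # half horizontal chroma resolution
    "4:2:0": (2, 2),  # half horizontal and half vertical chroma resolution
}


def samples_per_frame(width: int, height: int, scheme: str) -> dict[str, int]:
    """Luma and per-channel chroma sample counts for one frame."""
    div_x, div_y = SUBSAMPLING[scheme]
    chroma = (width // div_x) * (height // div_y)
    return {"Y": width * height, "Cb": chroma, "Cr": chroma}


for scheme in SUBSAMPLING:
    s = samples_per_frame(3840, 2160, scheme)
    total = s["Y"] + s["Cb"] + s["Cr"]
    print(f"{scheme}: Cb/Cr each {s['Cb']:,}, total {total:,}")
```

Only 4:4:4 supplies the full 24,883,200 values that match the display’s dots; 4:2:2 supplies 16,588,800, and 4:2:0 just 12,441,600, half of what the display could use.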

Worse still, we have no real delivery system to the 4K display itself that doesn’t also add compromises (no 4K DVD, no uncompressed cable stream, etc.). Sure, I can hook a state-of-the-art computer directly to my 4K display with the right HDMI cable and “deliver” my own content, but that approach has its own issues. I fill entire RAID enclosures with the 1080p video I’m shooting and editing for clients these days; doing the same with 4K would require massive amounts of drive rack space.
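
The storage problem scales with pixel count. This back-of-the-envelope sketch assumes uncompressed 24 fps, 10-bit 4:2:2 video (two samples per pixel on average); real client footage is compressed, so the absolute figures overstate actual use. The ratio is the point: with everything else held equal, 4K carries four times the data of 1080p. `uncompressed_gb_per_hour` is a hypothetical helper.

```python
def uncompressed_gb_per_hour(width: int, height: int, fps: float,
                             bits_per_sample: int, samples_per_pixel: float) -> float:
    """Storage for one hour of uncompressed video, in decimal gigabytes (1e9 bytes)."""
    bits_per_frame = width * height * samples_per_pixel * bits_per_sample
    bytes_per_second = bits_per_frame * fps / 8
    return bytes_per_second * 3600 / 1e9


# Assumed settings: 24 fps, 10-bit, 4:2:2 (one luma sample plus half a Cb
# and half a Cr sample per pixel, i.e. 2 samples per pixel on average).
hd = uncompressed_gb_per_hour(1920, 1080, 24, 10, 2)
uhd = uncompressed_gb_per_hour(3840, 2160, 24, 10, 2)
print(f"1080p: {hd:.1f} GB/h, 4K: {uhd:.1f} GB/h, ratio {uhd / hd:.1f}x")
```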

So we have three things that come into play:

- Capture — Even the current 36mp champion sensor is a little short on information for a true 4K display.

- Transfer — We have no pragmatic mechanism for getting a full capture to our high resolution display.

- Display — If we have a 4K display, we’re likely providing it sub-optimal information that the display then tries to reinterpret upwards (e.g. Samsung’s UHD Upscale function).

Now, you’d think that the consumer electronics makers would get together and actually do a better job of conforming capture and transfer to display (i.e. “standards”), but the funny thing is that the standards aren’t converging at all, and in many cases they aren’t even compatible. So what’s happening? We press upward with pixel count, because if you eventually get enough pixels, you kind of force the issue (e.g. a D900 would have enough data to fully drive a 4K display, and the GH5 or some such video-enabled camera will move toward 4:4:4 raw capture at 4K resolutions).

What’s being forgotten here is the lesson learned in the high-fidelity audio business by the very same consumer electronics players: lowest common denominator and convenience win the mass market. High quality and the discipline it requires eventually become a much smaller, high-priced niche.

Thus, when I wrote my line about an 8mp camera being “enough” for 4K displays, I was thinking about the lowest common denominator. Frankly, a well-captured and well-managed 8mp image displayed properly on any current 4K display makes for a bigger, brighter, more engaging presentation than the photos hanging on most folks’ walls, and we’re already at the point where we can easily move that data from cloud photo storage to your living room display. Most folks would be perfectly happy with that, and they aren’t going to chase walk-up-to-the-display-and-see-almost-reality levels of production that cost much more money.

So the sensor makers, like the display makers, wander mindlessly off into “more” territory and actually add problems for the user rather than solve them. No wonder it’s tough to sell a TV or camera these days.

What gets us to a usable parity? A 7680 x 4320 (33.2mp) Bayer-type array would do it, though that would be a native 16:9 sensor: its red and blue samples alone would be 3840 x 2160, an exact match for the display. So the D810 is close, as I noted, but not quite there.
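
The parity test can be written down directly: a Bayer sensor reaches parity when every color channel, taken on its own, has at least 3840 x 2160 samples. A sketch under the same RGGB and even-dimension assumptions as above; `bayer_meets_display` is a hypothetical helper.

```python
def bayer_meets_display(sensor_w: int, sensor_h: int,
                        display_w: int = 3840, display_h: int = 2160) -> bool:
    """True if red, blue, and green each have at least display_w x display_h samples."""
    red_blue = (sensor_w // 2, sensor_h // 2)  # red and blue grids are the same size
    green = (sensor_w // 2, sensor_h)          # green appears on every line
    return all(
        per_line >= display_w and lines >= display_h
        for per_line, lines in (red_blue, green)
    )


print(f"{7680 * 4320 / 1e6:.1f}mp")      # 33.2mp
print(bayer_meets_display(7680, 4320))  # True: red/blue 3840 x 2160, green 3840 x 4320
print(bayer_meets_display(7360, 4140))  # False: red/blue only 3680 x 2070
```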